Free Fall

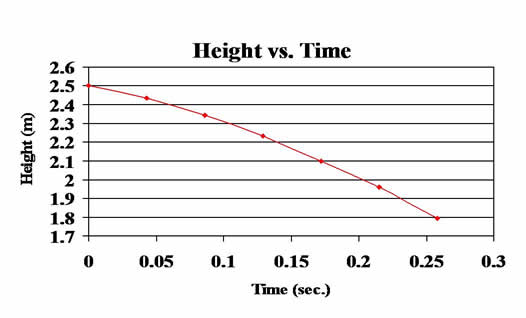

A ball is dropped, and its height in feet and the corresponding time in seconds are recorded by a CBR motion detector.

| Time (s) | 0 | 0.043 | 0.086 | 0.129 | 0.172 | 0.215 | 0.258 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Height (ft) | 2.5 | 2.436 | 2.345 | 2.233 | 2.1 | 1.959 | 1.795 |

Can we find a quadratic function to model this data?

How far does the object fall in t seconds?

The data “looks” like a parabola, so we will try to fit a quadratic model of the form h = at² + bt + c, where h is the height in feet and t is the time in seconds.

Choosing three data points and substituting them into the quadratic model will generate a system of three linear equations in the variables a, b, and c.
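As a rough illustration of this substitution step, the sketch below builds the three equations from the recorded data. It assumes the model is written as h = a*t^2 + b*t + c and that the first, middle, and last readings are the three points chosen; the source does not say which points were used, so that choice (and the helper name `build_system`) is hypothetical.

```python
# Recorded data from the table (time in seconds, height in feet).
times = [0, 0.043, 0.086, 0.129, 0.172, 0.215, 0.258]
heights = [2.5, 2.436, 2.345, 2.233, 2.1, 1.959, 1.795]

# Assumption: use the first, middle, and last readings (indices 0, 3, 6).
chosen = [0, 3, 6]


def build_system(indices):
    """Return one augmented row [t^2, t, 1, h] per chosen reading.

    Each row encodes the linear equation t^2 * a + t * b + 1 * c = h,
    obtained by substituting (t, h) into h = a*t^2 + b*t + c.
    """
    return [[times[i] ** 2, times[i], 1.0, heights[i]] for i in indices]


system = build_system(chosen)

# Print the three linear equations in the unknowns a, b, and c.
for t_squared, t, one, h in system:
    print(f"{t_squared:.6f}*a + {t:.3f}*b + {one:.0f}*c = {h}")
```

Any three readings with distinct times would produce a system with exactly one solution; choosing the reading at t = 0 is convenient because its equation gives c directly.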

The system can be reduced to this simpler system.
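The reduction itself is not reproduced here, so the following is only a sketch of how such a system could be reduced and solved. It assumes the model h = a*t^2 + b*t + c and, hypothetically, the first, middle, and last readings as the three chosen points; the function `solve_3x3` is an illustrative helper that performs Gauss-Jordan elimination, not a method stated in the source.

```python
# Assumption: the three chosen readings are the first, middle, and last ones.
points = [(0.0, 2.5), (0.129, 2.233), (0.258, 1.795)]  # (time in s, height in ft)

# Augmented rows [t^2, t, 1, h] for the model h = a*t^2 + b*t + c.
rows = [[t * t, t, 1.0, h] for t, h in points]


def solve_3x3(augmented):
    """Solve a 3x3 linear system by Gauss-Jordan elimination with partial pivoting."""
    m = [row[:] for row in augmented]  # work on a copy
    n = 3
    for col in range(n):
        # Pick the row (at or below the diagonal) with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if m[pivot][col] == 0:
            raise ValueError("system has no unique solution")
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    # The last column now holds the solution (a, b, c).
    return [m[i][n] for i in range(n)]


a, b, c = solve_3x3(rows)
print(f"a = {a:.4f}, b = {b:.4f}, c = {c:.4f}")
```

Because one of the assumed points has t = 0, its equation reads c = 2.5, which leaves only two equations in a and b. A different choice of points would give different coefficients, since the measured data do not lie exactly on one parabola.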